These days, people talk a lot about ‘the art of hosting’, but that is only one half of the dance of hospitality: there is an art of guesting, too. I can say this with conviction, because I have been a lousy guest, in my time, and lately I’ve had the luck to live with someone who teaches me to notice the things that make a person easy to have around: the moments at which an artful guest steps forward with a gentle insistence, the moments of well-timed withdrawal. Perhaps because he is one of life’s wanderers, Christopher Brewster has mastered this art. If I picture him now, it is standing in our kitchen, chopping vegetables at speed and maintaining an unbroken conversation while preparing a lunch that will show the influence of the years he lived in Greece.

He could be a classicist or a philosopher—either of those things seems more probable, from his manner, than that he should be a computer scientist working in a business school. In fact, as he touches on near the start of this conversation, his route into computer science came through the philosophy of language, and it was his feel for the ways of thinking embedded in digital technology that suggested the theme around which we wandered together.

It was the spring of 2014, my first year in Västerås, a middle-sized city by a lake in central Sweden. A string of friends had invited themselves to visit, so that I had taken to telling people, ‘We’re running a residency programme in our spare room.’ It occurred to me that a residency programme should really include a public events series, so one afternoon, I wandered into the local branch of the Workers’ Learning Association and a week later sixteen of us were gathered in their foyer for the first of what became eight weeks of Västerås Conversations. Our first guest was Anthony McCann, talking about tradition, the commons and the politics of gentleness. From week to week, certain themes would loop back: hospitality; friendship; the challenge of speaking up for things that are hard to measure or adequately define.

What follows is an unfaithful transcript of the fourth of those conversations. We have taken the opportunity to straighten out our more crooked sentences and to fill in details where memory failed us at the time. Still, what emerges from this process should be read as a sample from a larger conversation: both the relay of stories and ideas that ran over those eight weeks in Västerås and the ongoing conversation that is my friendship with Christopher.

It is a friendship that began at one of the handful of conferences I have been to where people seemed to be there to listen to each other; a conference whose theme was ‘the university in transition’ and the possibility of new, radical spaces of learning growing at the edges of a dysfunctional system. It seems appropriate, then, that our friendship should have become a strand in a web of convivial conversations that form a kind of ‘invisible college’ in which many of us have found nourishment over the years since. This text and the other Västerås Conversations are among the visible manifestations of that web.

DH: If we’re going to talk about the measurable and the unmeasurable, perhaps we could start with this observation: to a computer, the world is made up of numbers, while to a human being, that is not the case. Yet the more inseparable computers become from our lives, the more likely we are to fall into ways of thinking which do treat the world as made of numbers, even though this is not our experience.

Now, do you agree with that as a starting point?

CB: As a starting point, yes, but the story is more complex. When I first set out on my PhD, I remember meeting a man who said, ‘Wouldn’t it be nice if we could make human language behave like a programming language—if we could reach that level of precision?’ And my response is that this would be a complete loss, because it’s exactly the ‘failures’ of human language that are its virtue. So the fact that human language is ambiguous and vague is a feature, rather than a bug.

Computers are precise. It’s the digital nature of computers that gives the particular effect that we observe. They require everything to fit into either-or categories. This goes back into the intellectual heritage of computer science, which originates partly in logic and partly in mathematics. And because of this logical heritage which is physically embodied in the transistors and the physical hardware, it has been natural for computer scientists to wish to analyse the world in logical terms. They have been deeply influenced by thinkers like Frege and Carnap who believed that one could represent the world completely in logic. That remains to this day a fundamental, if very often tacit, assumption about all activity in computer science, whether at the theoretical end or in the development of business systems where you believe that you can have an enterprise resource management system that can put people into different categories and then describe the world in a perfect manner.

DH: So what you’re saying is that the way that computers represent the world has a heritage in particular areas of philosophy—particular ways of thinking about the world that are by no means the only ones available. Yet since these machines are now everywhere and intertwined with our lives to such an extraordinary extent, they carry these ways of thinking into our lives, they shape the way we see the world and the way that we reshape the world?

CB: Yes. Each computer, each system we use, each piece of software embodies a particular model, a particular perspective on the world—and we tend, in the end, in using these systems to believe the model rather than the world. Whether it’s an accounting system or a personal fitness management system, what happens is that you create a set of categories, a model, and then either things fit or, if they don’t, they tend to be ignored.

DH: And when you’re interacting with these machines, you either agree to act as if the world is like that, or the interaction with the machine quickly becomes difficult. So, to the extent that we spend a lot of our time dealing with these machines, we have to spend that time agreeing to pretend that the world is more logical and more capable of being reduced to things that can be measured than the full range of our background experience might suggest. And for as long as our focus is held by the machine, that way of seeing the world is being affirmed.

CB: Part of the problem here is the plasticity of the human mind: our own native ability, agility, flexibility to deal with things.

Several years ago I was a reviewer on a research project that concerned the analysis and production of emotions by computers. The idea was that you would construct computer systems that could detect emotions in the voice or gesture of people and that would then produce something that would represent an emotion. They had an example, a system which would have an artificial tree on a screen, and if you spoke to it nicely it would grow and if you spoke to it harshly it would slowly shrink. And they were terribly pleased with this because they said, ‘Look, we have managed to capture human emotion and analyse it in a way so that the tree grows when people are nice and happy and positive…’

DH: And it will shrink if people are being nasty?

CB: The trouble is, they didn’t really think about the likelihood that human beings would observe the tree and, within seconds, respond appropriately and figure out how to control the tree. So it had nothing to do with your real state of emotions, but technically it was a brilliant success!

DH: So, to go back to that conversation from when you were starting out on your PhD, there is this utopian ambition—which people who are enamoured of the ways of thinking embedded in these machines tend towards—which is a belief that the world would be better if we could get rid of the vagueness and achieve the same kind of precision that these machines are capable of?

CB: Well, this tendency has deep intellectual roots. You can trace it back to Descartes and the Enlightenment, or all the way back to Aristotle’s attempt to enumerate all the possible kinds of things within a fixed set of categories. There has been a repeated attempt throughout western intellectual history to develop a total system of categories that will cover all of human experience. My favourite example is in England, in the mid-17th century, when you have people like Francis Lodwick with his project for A Perfect Language. There was a whole movement of ‘philosophical languages’ and at the centre of it was John Wilkins, one of the founders of the Royal Society, who produced this extraordinary taxonomy of every known scientific concept. He founded the Royal Society to ensure the longevity of his project and when he had produced his taxonomy, he told everybody, right, now you have to keep it up to date! And, of course, everybody ignored it. But the tendency continues—and the next step, in the 18th century, is Linnaeus from Sweden who produced a taxonomy of all animals and plants, the foundation of the modern biological naming system. So you have a growing sense that, yes, we can describe the world completely.

DH: We can know the world by having a box for everything!

CB: In the late 20th century, there are innumerable failures. There’s a wonderful project which began in the mid-80s called Cyc which has put hundreds of man-years of work into constructing a logical system to represent the whole of the world. Now, there are some fantastic examples of people trying to use the system and failing completely, yet it carries on—it’s a recurring human ambition.

And of course, if you pin a computer scientist to the wall, they will say ‘Well, no, of course we don’t mean to describe the whole world.’ But you see it creeping out in all kinds of ways, even if it’s just a small corner of the world, even if there’s an acknowledgement that we won’t describe the whole world in our set of categories—there is still an assumption that those categories will represent reality perfectly.

The other thing to say is that there is a lot of money riding on this assumption. If you want to find the current version, it goes under the name of ‘smart cities’ or the ‘Internet of Things’, a vision of a world in which everything we interact with is connected to the network. But it has consequences, socially, that people have not yet fully worked out, because what it means is that we are trying to construct systems that will model every aspect of reality. And you need to tell your story of trying to use the electronic toilet…

DH: Yes, this is about what that interconnected vision looks like in practice. It was six weeks ago. I was at the railway station in Borlänge—a small city in Dalarna, which is Sweden’s answer to Yorkshire—and I needed to use the toilet. There was a toilet there, it was vacant, and there was a keypad on the door. To open the door, you had to put in a code and a notice on the wall explained that, to get a code, you needed to pay 10kr by sending a text message to the number provided. So I sent a text message to pay my 10kr. After a couple of minutes, I got two replies. The first was from the toilet company, telling me my payment had failed. The second was from my Swedish mobile provider, explaining that, in order to make the mobile payment, I needed to download an app. So now I had to get online, find the app, download and install it, then give it my bank card details and approve the safety request from my bank, allowing me to transfer 10kr from my current account into the ‘virtual wallet’ that now existed on my phone and, presumably, somewhere out there in a data-centre. At which point, I could send the text message again, pay my 10kr and get texted back with a code that would open the door—and finally, after twenty minutes, I had access to the toilet. In among all this technological wizardry, the real miracle is that I had managed to perform all these tasks whilst keeping my legs crossed.

CB: So, this is a perfect example of smart cities! And one consequence is, now the mobile phone company and the app company and possibly the company operating the toilet door all know who has gone to the toilet and when!

DH: I guess so—or maybe there is some way in which the controlling of access to the toilets is saving the local council money? But I wonder if it’s really that rational, or if it’s just that the idea of the Internet of Things sounds so shiny and new, and whoever was responsible for deciding that the bathroom of Borlänge railway station should be part of it couldn’t envisage the reality of how it would turn out, the reality of the Internet of Toilets?

I guess what I’m asking is, did anyone ever know what the problem was that this was meant to solve? And maybe we could extend this to the whole project that you’ve been describing, this recurring project to come up with a complete set of categories for describing the world—is it a problem that we don’t have a complete set of categories like that?

CB: I think it’s very important that we don’t have that set of categories! I’d argue that it’s actually a positive thing.

But I think what we’re getting to now is this concept of ‘legibility’, which comes from James C. Scott’s book, Seeing Like A State. And this construction of categories is part of the need that organisations in authority have to describe their territory, to describe their universe. That’s where the ‘problem’ comes from.

DH: Yes, Scott’s concept of legibility, that the modern state has a great desire and demand for the ability to see and read activity of all kinds from above. He’s talking about the kind of centralised political systems that came about in the past two or three centuries in Europe and were gradually extended across the world. To meet this demand, you need to standardise things, to reduce the complexity to a manageable model. And the classic example that Scott uses is forestry in Germany.

CB: So in the 18th century, in Prussia and Saxony, forests were a major source of income to the princely states. They developed ‘scientific forestry’ as an attempt to rationalise the revenue: new measurements were developed, trees were categorised into different size categories and their rates of growth were charted, so that the output of the forest could be projected into the future. But everything else that made up an actual living forest had vanished from these projections, all the other species, all the other activities—as Scott says, they literally couldn’t see the forest for the trees. Then, in the next phase, they began planting ‘production forests’, monocultures with trees all the same age, lined up in rows. And of course, with our ecological perspective, we know where this is heading: as the soil built up by the old growth forest becomes exhausted, the trees in the new rationalised forests no longer grow at the rates projected, the forest managers get caught up in needing fertilisers and pesticides and fungicides, trying to reintroduce species that had been driven out, struggling to reproduce something like the living forest that their way of seeing had destroyed.

DH: And at the root of this, there’s a ‘problem’ that was only there from the perspective of the office at the centre of a huge area of territory that wants to be able to ‘know’ what is going on everywhere and then improve the numbers in the model that has come to stand for the forest. There was no ‘problem’ from the point of view of the old-growth forest, or the people who were actually living and working there.

CB: Scott uses the concept of legibility to explain a number of seemingly disparate phenomena. Things like surnames, which only arrived in some parts of Europe as late as the Napoleonic period—they give the state a way of knowing who is who, how many people there are in a population, so that they can be taxed or conscripted effectively. Standardised weights and measures—the metric system was invented in France at the end of the 18th century and then gradually imposed upon the whole of Europe, replacing measures that varied from one market town to the next. The introduction of passports is another example. And you see this desire for legibility, too, within companies. The whole concept of Taylorisation and time-and-motion studies is another form of increasing the ability to read activity from above.

Now, one of the origins of computing is in the construction of machines to do some of this work. It took seven years to process all the data that had been collected in the 1880 census in the United States. Between 1880 and 1890, the population was growing so fast that, not only was the data from the last census out of date, it was reckoned that it would take 13 years to process the data from the next census. But right on time, you get the Hollerith machine, a punchcard database system that allows all the data to be processed within six weeks.

That was a huge improvement from the point of view of the state’s ability to understand what’s going on—and it fits right into the story that we’ve been talking about of the intellectual origins of computers in the desire to measure, observe, count and categorise.

But we mustn’t only view this as negative, it has produced wonders.

DH: I think this is why I wanted to frame this conversation in terms of ‘the limits to measurement’, rather than ‘the problem with measurement’. Because you’re right, however anarchistic our instincts might be, I don’t know many people who would honestly choose to forego all of what the state provides for them. I remember Vinay Gupta saying, ‘I’ve stopped worrying about the power of the state, I’ve started worrying that the state is going to collapse before we’ve built something to replace it.’

And I remember the first time I tried to speak about this question of the measurable and the unmeasurable, realising that there were people who heard what I was saying as an argument against measurement. Actually, what I would like to bring into conversation is this: under what circumstances is measurement helpful and appropriate? Under what circumstances does measurement become problematic? And how do we develop a language for talking about these things more subtly?

And perhaps one way of approaching that subtlety is to bring in an idea from Ivan Illich. In his early books, in the 1970s, he talked a lot about counterproductivity and ‘the threshold of counterproductivity’: the point beyond which increasing the intensity or the amount of a given thing begins to produce the opposite of the effect that it has been producing so far. Beyond a certain point, he argued, our schooling systems end up making us stupider as societies, our health systems end up making us sick, our prison systems end up creating more criminality. Illich brought together a lot of detail to make those arguments, but I think the general principle of the threshold of counterproductivity can be a good tool for thinking with, because it gets us free of thinking in either-or terms, without replacing that with slacker relativism. Instead of debating whether X is a ‘good thing’ or a ‘bad thing’, or claiming that it’s all just a matter of opinion, you can say that, up to a certain point, X tends to be helpful—and beyond that point, it becomes actively unhelpful.

This doesn’t have to be particularly esoteric. Think about food: we can pretty much all agree that food is a good thing, but once you’ve eaten a certain amount, it’s not just that you get diminishing returns, it’s that you’re going to make yourself ill.

CB: I think this is an excellent concept. I think there’s a complete lack, though, of applying anything like this to technology and asking at what point one reaches the level of counterproductivity. I’m thinking of the kind of software that is imposed to track accounting in an organisation: where you used to just get a piece of paper and hand in your receipts and somebody would sort it out, you now have to fill in all kinds of forms online, collecting far more information than used to be the case. Now, as far as the accountants are concerned, this is wonderful: they can analyse exactly what’s going on in the system. The trouble is, you’re getting a huge counterproductivity effect because it becomes so complicated to do something. Now, where in the system can one actually make that decision to say, actually, we don’t want to have that type of accounting system, because the effect on the core activity of the organisation is going to be negative? That’s missing.

DH: Even the possibility of that being a legitimate conversation is missing. But I wonder whether we can look at small, grassroots organisations as spaces within which you don’t have the requirement for the level of measurement and legibility—and, in its absence, certain kinds of human flourishing tend to be more likely to happen than they are in most institutional spaces. Equally, within institutions, you often find pockets within which people seem to be coming alive. If I think of the examples that I’ve experienced, those living corners within larger institutions, what is going on is usually that you have one or two individuals who are smart in a particular way—and they are effectively holding up an umbrella over this human-scale space.

CB: Hiding it!

DH: Yes. They are producing the necessary information to feed upwards and outwards into the systems, to keep the systems off people’s backs…

CB: Strangely, in the business world—where I have half a foot—there’s a whole vast literature about the wonderful energy and passion and creativity that occurs in startups. There’s a slightly smaller literature on what are called ‘skunkworks’, these hidden projects that occur in large organisations that sometimes then emerge as being very important. There are some classic inventions that were invented by somebody working in a big organisation, a little part-time project that they were pursuing out of curiosity, and eventually it becomes their best-seller. Post-It notes is the example that always gets used.

DH: So what you’re saying is, within the business school world, there’s at least a partial recognition of the need for spaces in which the rules are off people’s backs…?

CB: But there’s a cognitive dissonance, because then there’s a whole other literature which develops all these systems for tracking and tracing, Taylorising and managerialising things.

DH: So, the thing that we’re talking about, when we talk about the kinds of spaces in which people come alive—spaces in which there is room for human flourishing—we’re asserting that one of the characteristics of such a space is that there is less pressure for reality to be reduced to things which can be measured. Things which can’t be measured are allowed to be taken seriously—and, somehow, some individual or group has built an interface to the systems that require measurement, that keeps those systems satisfied, without reducing the activity within the space to activity to satisfy those systems.

So, the thing that goes on within those spaces, I think, is very closely related to what Anthony McCann is getting at when he talks about ‘the heart of the commons’. His starting point is Irish traditional music, the field where he started his studies, and he’s talking about what it is that matters to people within the traditional culture and that goes missing from the version of that culture that you find in the archives of the folk song collectors. Those archives are the legible version of the culture, but you won’t find any people there, and you won’t find the thing that matters most, what it feels like to be there. And when Anthony was here a few weeks ago, he was talking about the need to develop language for this thing that matters. And I guess that means developing a sufficient degree of legibility, so that this is not completely invisible, in order to defend it. In order for it not to be crushed.

And this is where it gets uneasy, especially when we’re talking about business schools trying to put their finger on this. How do you describe ‘the thing that matters’, how do you word it, is it even possible to do so…

CB: Without destroying it?

DH: Yes, exactly. That’s the paradox, isn’t it? What’s the answer?

CB: Well, if you look at many spiritual traditions, they would say that you can’t word it. And you get this in philosophy, Wittgenstein’s ‘Whereof one cannot speak, thereof one must be silent.’

So what could function in the formal world? Again, I’m thinking back to the business literature, where there’s a contrast in this fascination with top managers. There’s this obsession: what gift do top managers have that makes an organisation really work? And they really talk about it as some kind of magical property. ‘We don’t really know what it is, but if we can find somebody who’s got it then we’re going to pay him a lot of money!’ All kinds of people try and describe it, one way and another, but there is a language there about—whoa, this is something that we can’t really measure, but we know it exists.

DH: Maybe it would be interesting to start looking for the different examples from different places where we do seem to have some common vocabulary left for talking about things that can’t be reduced to that which is measurable. I say that, because I listen to you and I realise that this is what you’re doing, with your half a foot in the business world—you’re spotting the places within the literature of a rather unlikely field where there is language for talking about this. And I’ve come across a completely other example in the ideas that I’ve been working with around commons and resources.

To summarise the problem, first: a resource is something that can be measured, something that is seen in the way that those German princes were looking at a forest. There is a very dominant idea, when people talk about commons—whether they’re talking about the ‘information commons’, the environmental commons, or whatever—that what we’re talking about is a pool of common resources. Again, this is something that Anthony McCann has directed attention towards. We are so used to thinking of things in terms of resources—our organisations have Human Resource departments—but a resource is something to be exploited. And there are very few areas of modern existence where we have a good vocabulary for talking about the idea that some things just shouldn’t be exploited. But one place where we have it is friendship. Because when somebody who you thought of as a friend treats you as a resource, you say, ‘I feel used.’ And everyone knows what you mean. It’s an everyday expression, you don’t have to get into a long explanation of why everything shouldn’t be treated as a resource. We still have that as common knowledge.

So I’m getting this idea that there might be a project to document, to gather together, the unlikely mixture of pockets where there is still shared vocabulary for talking about the importance of the part that can’t be measured.

CB: I think that good organisations are aware of this. They are aware of the community that is built within the organisation and the dependence of the organisation on non-contractual relationships, on collaboration, on the ability of people to do favours for each other at a friendship level, even though it’s in a workplace.

DH: This is what David Graeber calls the ‘everyday communism’ which subsidises the world. The whole world system that we’re in, which might look like this globalised, capitalist, neoliberal system, would grind to a halt without the everyday communism of people doing things for each other without asking, ‘What’s in it for me?’

CB: So good organisations recognise this. The thing is, because it’s not measured, because it’s not worded, it’s often difficult to defend. It can be very simple things, like cancelling Christmas parties. Good managers know that the best way to get their team to work is to take them out, get them all drunk and next time they meet, half the problems will be solved!

DH: That sounds like a rather culturally-specific approach to management…

CB: There are certainly variations on the theme! But the basic concept applies. And that’s really creating a space for illegible activity, illegible conversations, which then can break down barriers, can break down categories and solve problems. But that needs to be defended, because it’s very easy for the Christmas party or the social space to be the first luxury that gets cut.

Where these things work, it’s very often, as you say, those particular people who create an umbrella, who create a protected space. So perhaps there is an opportunity there to try and teach people who are in positions of leadership that there is a particular aspect which has to do with creating these special spaces. Developing a language for that can make them work much better as organisations.

DH: There is a difficulty here—and I suppose one way into talking about it might be through a lovely story that I came across. I met a guy who had been the managing director of a company with about 200 people working for him. The company was taken over and the nature of the takeover was such that this company was going to be wound down, none of these people were going to work for the new company, but everyone would continue to be employed for the next year until the process was complete, with relatively little to do. And he thought, what are we going to do, for the next year? And, in particular, what can we do so that these people are in the best position possible to go off and find new work or do whatever it is they do next? And he decided that they would just get out on the table everything that people were interested in, the things that they did, the things that they had never told anybody at work about, that were really important to them in their lives—and just get everyone’s skills and talents and ideas out there, and see what would happen as they started matching things up together. And he said it was the most amazing year of his career—and I could see it, in the way he talked about it.

So, here’s the problem: things can become amazing when we can bring more of ourselves into the workplace, the school, whatever space it is in which we spend most of our waking hours. But… the shadow side of this is that, you’ve talked about Taylorist management and we think of Henry Ford and the production line, that whole model of 20th century capitalism, but there is this whole other thing of the post-Fordist, post-industrial economy, where we are absolutely expected to bring more of ourselves to work, because it’s not just our bodies but our emotions that our employers want to use as resources.

Once upon a time, I used to work as a corporate spy. Well, really I was a struggling freelance radio journalist, taking bits and pieces of other work to pay the bills, and every few weeks I used to get paid to go and sit in Starbucks, drink a coffee, make various observations, then buy a takeout coffee and run round the corner to weigh it and take its temperature, and fill out a twelve-page form that went back to Starbucks headquarters to tell them how their staff in this local branch were doing. The bit that sticks in my mind was that, not only did I have to stand in the Starbucks queue with a stopwatch in my pocket, timing the process and remembering whether the staff were following the different stages of the script—I also had to report whether, over and above the script, I had observed staff recognising and acknowledging regular customers and initiating spontaneous conversations. So Starbucks was essentially trying to write the computer program for how you simulate, systematically, the friendly local cafe, only with precariously employed staff who have no real connection to the place they are working and are at the mercy of this mystery shopper who is sent once a month to check up on them.

At the same time, I was living around the corner from an independent cafe run by a couple of old hippies who were seriously grumpy. You went in there for the first time and you’d get a good cup of coffee, but they would be surly with you. If you kept coming back, though, they would begin to acknowledge your existence and you would begin to notice that they had their regulars who got to sit at the table at the back and play chess with them and decide what music went on the stereo and smoke a joint together when it was quiet in the afternoon.

And I realised that the one thing that Starbucks couldn’t attempt to simulate was customer unfriendliness—and that there was something about this customer unfriendliness that felt right. If we think in terms of thresholds, again, maybe it’s kind of OK to use money to pay for a good cup of coffee, but if I’m paying for someone to act like they’re my friend, we’ve crossed one of those lines?

But this is the thing: we want to create spaces in which we can bring more of ourselves to what we do, in which we can be more than just getting through the day, trying to avoid drawing any unwanted attention and trying to satisfy the requirements of the system that we’re plugged into—yes, we want to be doing something more meaningful with our lives, bringing more of ourselves into the situation. But if we try to make legible ‘the stuff that matters’, the stuff that would bring more of ourselves into the situation, how do we do it without it becoming another resource to be harnessed, allowing the extension of exploitation even further?

CB: I don’t know. I think that’s a real danger and I think, equally, in creating the vocabulary that we might develop, we are in danger of formalising it. And even if our terms are very vague and abstract, somebody will say, ‘Ah, here I have a collection of categories and I will construct a form or an application that will then be used to describe that.’ Just like your Starbucks example: is the barista behaving like he is happy?

DH: Yes, they create the model and then they try to run the model in a way that is as convincing a simulation as possible, whilst extracting as much…

CB: The aspect of this creation of models that we haven’t really addressed, but is intimately related here, is that we create the models and then we believe the models and don’t believe reality. We see this everywhere around us. The classic phrase is ‘All models are wrong, but some models are useful.’ Models don’t represent reality perfectly: a perfect representation of reality would be a cloned copy. Necessarily, they are abstraction and simplification, in order to be useful. The trouble is, we create a model, typically today on a computer screen, and then what we see in front of us on the screen becomes ‘reality’.

My favourite example is a parcel I sent in Britain by Special Delivery, guaranteed to arrive the next day. It didn’t arrive. I rang the Post Office the following day and said, ‘My parcel didn’t arrive.’ The Post Office employee said, ‘Yes it did, it says so on my computer.’ And at that moment, the friend to whom I had sent it rang me on my mobile and said, ‘The postman is just coming and giving me your parcel now!’ I told this to the Post Office employee on the other line and she said, ‘No, that’s not possible, it was delivered yesterday.’

DH: So that’s the most absurd version of believing the model, rather than the evidence of our experience. But your point is that this is a pattern.

CB: It’s systematic and it’s very dangerous, because you get a completely warped view of reality, very often. Reality changes, human beings change, and if your model doesn’t change appropriately, you fall into all kinds of pits. Governments collect the wrong data, or miss some major social change that is completely outside their perception at every level. Equally, in organisations, you have this problem. So, as part of the development of this language that we’re talking about, we also need to develop a language for making people aware of the limitations of the model. Making people aware that the model is useful within these limits, but there may be more, and to have a look outside the window occasionally to see what else there is.

DH: So, this brings us back to the idea of treating the vagueness and imprecision of language as a feature rather than a bug. As not a problem that we need to try and get rid of, but actually as something helpful.

You mentioned Wittgenstein’s line about remaining silent—and the way that different religious traditions have treated the thing that matters most as incapable of being put into words. But religions haven’t exactly remained silent! One thing that does go on within religious language is the deliberate multiplication of language to keep in mind its inadequacy. So you have the hundred names of God, because one way of speaking about the unspeakable is by never forgetting that none of the language that you use is adequate to it. And, in some ways, we need a hundred names for everything! This, again, recalls something that Anthony talks about—lifting up the words and looking underneath—and I think that part of the way that you avoid forgetting to do that is by using a multiple vocabulary. Rather than using one word all of the time, at which point that word quickly comes to be treated as if it’s a perfect match.

So perhaps that’s one principle that we could try to use?

CB: It sounds like a very good starting point. The challenge is making that acceptable, socially. There is a long history in the religious traditions that you’re talking about of the difficulty of language and the difficulty of spiritual concepts—and that this is OK. You know, if you look at the Talmudic traditions of interpretation, it is OK to struggle with concepts, that is a valid task. Whereas the modern tendency is to want to simplify, to take away effort, to take away difficulty all the time.

Nearly every Computer Science paper will start off by saying ‘We wish to reduce the effort involved in doing X and here is the method…’ That’s the basic principle by which you do research. We actually need to write new Computer Science papers which start ‘We are now increasing the effort to do X because this will have beneficial effects.’ I have suggested this in the past, under the heading of ‘slow computing’.

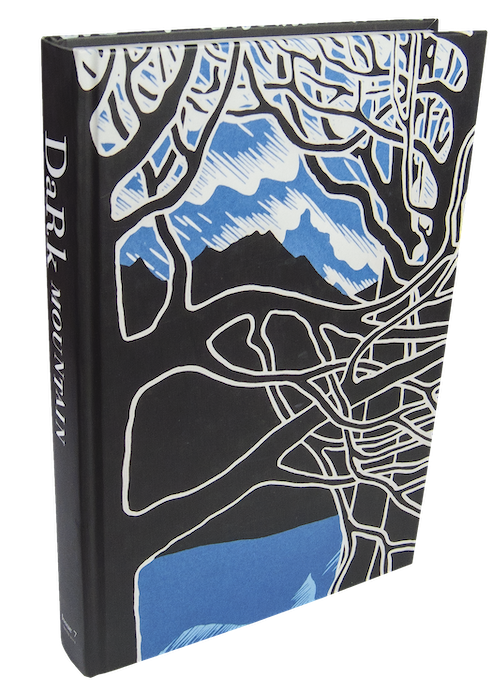

Based on our discussion during the Västerås Conversations, this text was first published in Dark Mountain: Issue 7.